In 2016, we learned what a newly created, unbiased AI chatbot could learn from the internet. Microsoft's Twitter bot, TayTweets, rattled the Twittersphere with her sexist, racist, and egocentric tweets. Since then, Microsoft and other businesses have paid close attention to making sure their AI chatbots don't damage their public image.

Learning from the mistakes of TayTweets

TayTweets was built to understand and interact with the Twitter community using modern lingo.

The goal was for Tay to learn and adapt to the conversations she was exposed to, and she certainly did. But the results were not as wholesome as Microsoft had anticipated.

Trolls immediately began abusing her, flooding her with distasteful tweets that conditioned her to offensive language. The situation spiraled out of control.

In the roughly 16 hours she was online, Tay tweeted more than 96,000 times.

Tay's tweets managed to offend women, the LGBTQ community, Hispanics, Jews, and many other groups.

Some of Tay's tweets gone wrong

One of Tay's greatest flaws was that she could be used to repeat hateful remarks. If someone told the bot to "repeat after me," Tay would tweet back whatever followed. Trolls quickly exploited this to make Tay echo, and appear to agree with, their offensive comments. Microsoft went as far as to call the ordeal a "coordinated attack."

Untrained AI and Twitter: a tough combination

As a platform, Twitter has always valued anonymity and free speech. While its free-speech policies are often debated, most would agree that it's not an ideal environment for an untrained bot like TayTweets.

With proper planning and safeguards in place, however, chatbots are a practical and useful way for people to interact with businesses and brands. Tay was an extreme case, and it serves as a warning to any company developing its own conversational AI.

Many found the TayTweets incident so bizarre that they considered it humorous. Others saw it as a deeply concerning glimpse of what AI technology could become. Now, in 2019, we've had plenty of time to learn from these mistakes. Companies whose bots don't help people, or worse, offend them, will have a damaged reputation to mend.


Defining the uses for chatbots

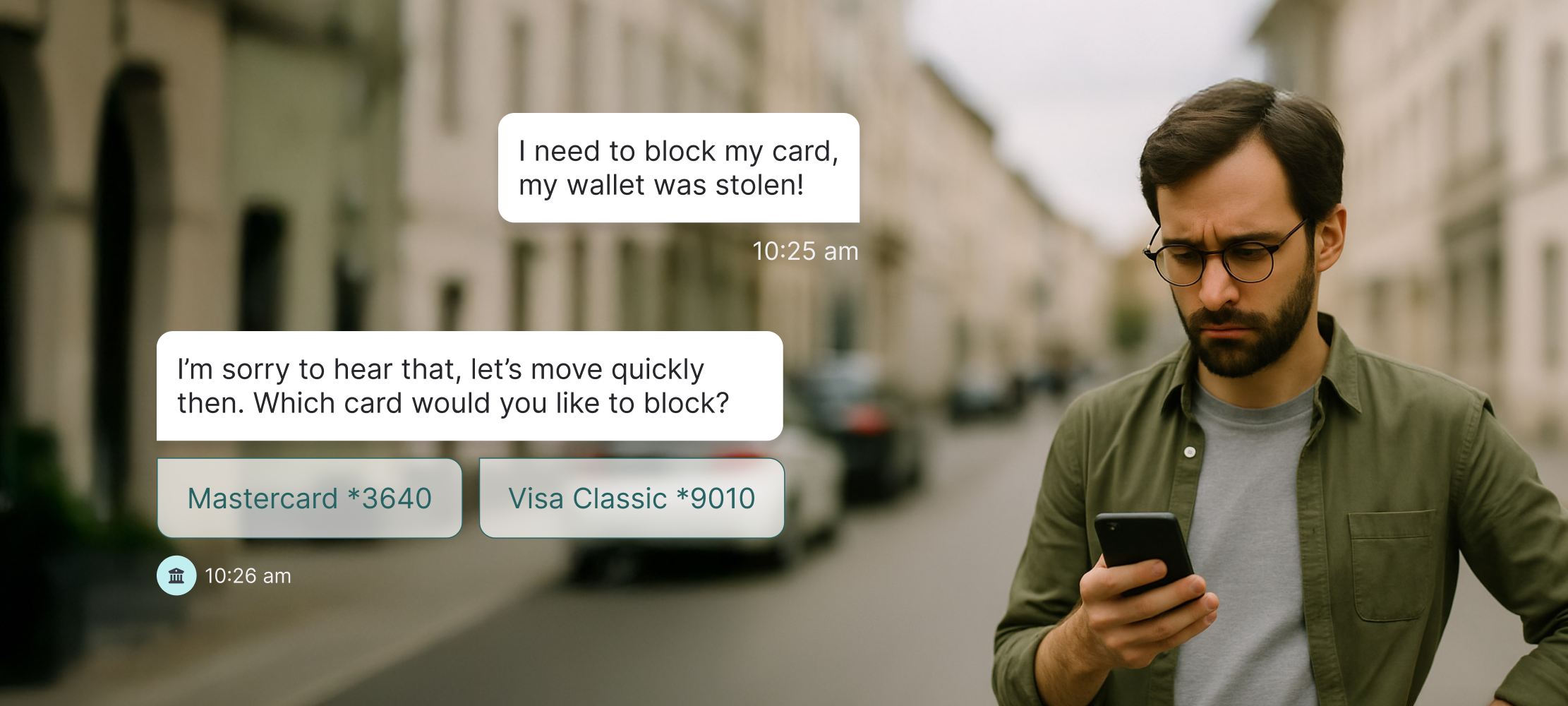

Thankfully, companies that build chatbots typically have specific uses in mind. They don't need the chatbot to converse about anything and everything. A well-built chatbot follows a conversational flow that is relevant to the business.

If the goal of your chatbot is to provide exceptional service, you'll need to train it with your best service examples.

Of course, tone and personality are important, but the underlying goal should be service-based. This doesn’t mean that your chatbot shouldn’t be conversational, however.

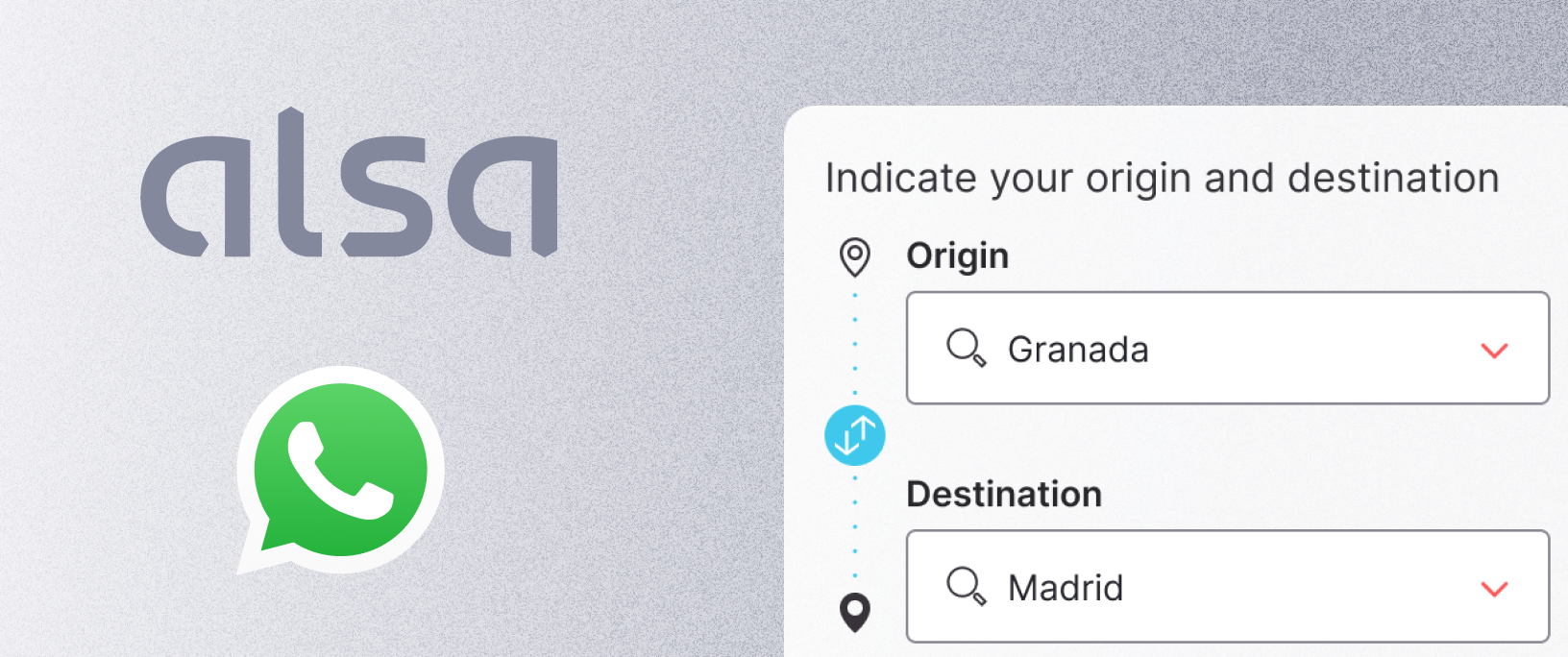

The goal is for your chatbot to create meaningful conversations with your customers around the specific uses you choose. Rich elements such as carousels, buttons, and lists take these conversations beyond the plain-text exchanges of chatbots like TayTweets.

Properly training your chatbot

Although TayTweets was a disaster we couldn't stop watching, we'd rather not see it happen again. We've put together a guide to training your AI-enabled chatbot that will help you avoid a PR crisis like Microsoft's.

Ready to build your own conversational chatbot? If you're a developer, start using our platform now; otherwise, contact us for more information.
